Yesterday, I tested a tiny USB microphone for E.V.E and managed to recognize the recorded sentence with the help of the wit.ai platform (even though the microphone's quality was a little poor).

Let’s dig a little deeper into wit.ai and see how it could help E.V.E.

Trying another USB Microphone

This morning, I received the second USB microphone I ordered from www.dx.com. It’s a little more expensive (5.33 €) but still very cheap for experimentation purposes. It’s a lot bigger than the tiny one I used yesterday, and flexible too. Once connected, it will face the user (unlike the other one, which is stuck in the back of E.V.E). Hopefully, it’ll provide better input for voice recognition.

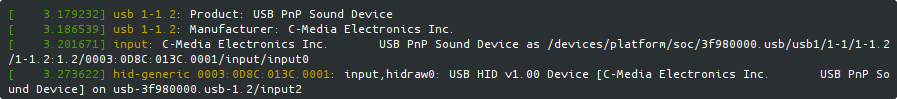

I plugged the mic into the RPi and booted up the system. The system logs identified the microphone as a C-Media USB PnP Sound Device with a HID interface, just like the tiny one:

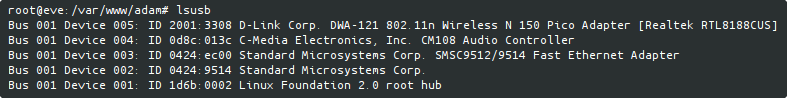

Running the `lsusb` command confirmed it:

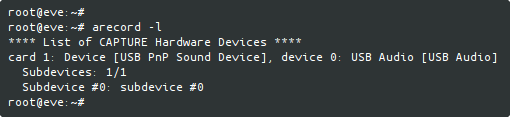

And `arecord` listed the same card number and subdevice ID:

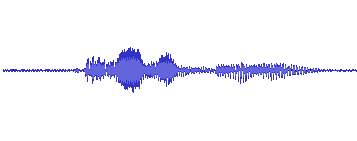

Without even cranking up the device gain with alsamixer, I immediately recorded a new phrase (“Quelle heure est-il ?”, i.e. “What time is it?”) from a couple of meters away from E.V.E:

`arecord -D plughw:1 -f dat -r 48000 record2.wav`

I applied the same filtering process (trimming the recording, boosting the volume, and applying low-pass and high-pass filters). The result was a much better recording than yesterday's.
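The post doesn't show which tool performs this cleanup. As a rough illustration of the four steps only, here is a minimal pure-Python sketch, assuming the WAV samples have already been decoded into floats in [-1.0, 1.0]. The silence threshold, gain and cutoff frequencies are hypothetical values, and the filters are simple first-order (one-pole RC) filters, not necessarily what the author used.

```python
import math
from typing import List


def trim(samples: List[float], threshold: float = 0.02) -> List[float]:
    """Keep everything between the first and last sample louder than `threshold`."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []
    return samples[loud[0]:loud[-1] + 1]


def boost(samples: List[float], gain_db: float) -> List[float]:
    """Apply a gain in decibels, clipping the result to [-1.0, 1.0]."""
    factor = 10 ** (gain_db / 20)
    return [max(-1.0, min(1.0, s * factor)) for s in samples]


def low_pass(samples: List[float], cutoff_hz: float, rate: int) -> List[float]:
    """First-order (one-pole RC) low-pass filter."""
    dt = 1.0 / rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out: List[float] = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way towards the input
        out.append(y)
    return out


def high_pass(samples: List[float], cutoff_hz: float, rate: int) -> List[float]:
    """First-order (one-pole RC) high-pass filter."""
    dt = 1.0 / rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)
    out: List[float] = []
    y = prev_x = 0.0
    for x in samples:
        y = alpha * (y + x - prev_x)  # only changes in the input get through
        prev_x = x
        out.append(y)
    return out


def clean(samples: List[float], rate: int = 48000) -> List[float]:
    # Hypothetical settings: +10 dB, 3 kHz low-pass, 100 Hz high-pass.
    s = trim(samples)
    s = boost(s, 10.0)
    s = low_pass(s, 3000.0, rate)
    return high_pass(s, 100.0, rate)
```

The 48000 default matches the `-r 48000` rate passed to `arecord` above. The low-pass removes hiss above speech frequencies, while the high-pass strips hum and DC offset below them.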

E.V.E now looks a little funky, with a kind of ear sticking out of her left side, like an old-style hearing aid.

But I guess it’s still OK; after all, it’s a prototype. I’ll decide later on whether to keep this microphone or the tiny one.

Playing with wit.ai

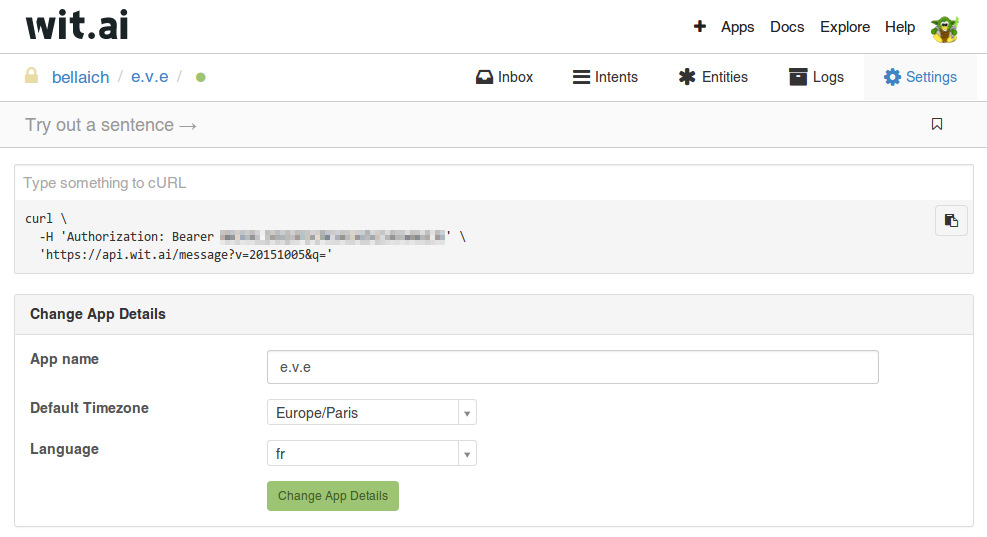

I logged into wit.ai’s back-office with my account and created a new App named “e.v.e”. I selected “fr” as the default language, since I want to interact with E.V.E in my native tongue (sorry about that: you’ll see a few French sentences in this post, but I’ll try to keep things clear nonetheless):

The App came with a new Access Token (blurred in the previous screenshot), so I updated yesterday’s recognition script accordingly.
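For reference, here is a minimal sketch of the request such a script might send, using only Python's standard library. It assumes wit.ai's HTTP `/speech` endpoint, with the App's Access Token passed as a `Bearer` token and the recording posted as `audio/wav`; the `v` version date is an assumption, and wit.ai's API has evolved since this post.

```python
import urllib.request

# Assumption: wit.ai's speech endpoint; the "v" date pins the API version.
WIT_SPEECH_URL = "https://api.wit.ai/speech"


def build_speech_request(wav_bytes: bytes, access_token: str,
                         version: str = "20141022") -> urllib.request.Request:
    """Prepare (but do not send) a POST of a WAV recording to wit.ai."""
    return urllib.request.Request(
        f"{WIT_SPEECH_URL}?v={version}",
        data=wav_bytes,
        headers={
            # The App's Access Token goes in a Bearer authorization header.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "audio/wav",
        },
        method="POST",
    )


def recognize(path: str, access_token: str) -> bytes:
    """Send the recording and return the raw JSON response body."""
    with open(path, "rb") as f:
        req = build_speech_request(f.read(), access_token)
    with urllib.request.urlopen(req) as resp:
        return resp.read()
```

Calling `recognize("record2.wav", "YOUR_ACCESS_TOKEN")` would post the recording and return wit.ai's raw JSON. Keeping `build_speech_request` separate makes it easy to inspect the request without touching the network.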

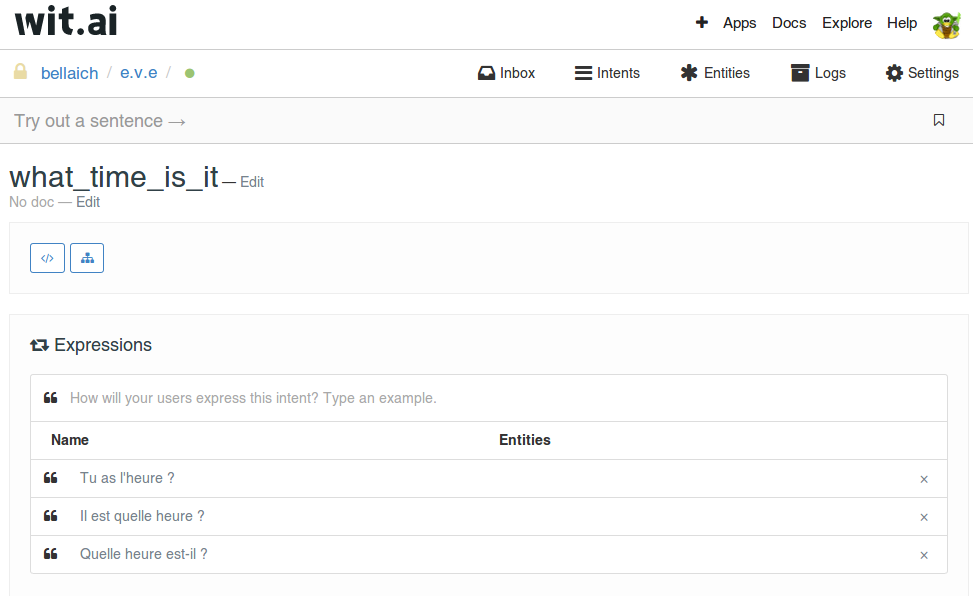

Then I created a new intent called “what_time_is_it” and associated three expressions with it (three different ways to ask for the time in French):

- “Quelle heure est-il ?” (“What time is it?”)

- “Il est quelle heure ?” (a more casual “What time is it?”)

- “Tu as l’heure ?” (“Do you have the time?”)
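wit.ai learns from these expressions with its own models and is meant to generalize beyond them. Purely as a mental model of "several expressions, one intent", here is a toy lookup table in Python. `TRAINING`, `normalize` and `lookup_intent` are hypothetical names, and exact matching is a deliberate simplification, not how wit.ai works.

```python
import unicodedata
from typing import Dict, List, Optional

# Toy model only: wit.ai learns from these examples, it does not do exact lookups.
TRAINING: Dict[str, List[str]] = {
    "what_time_is_it": [
        "Quelle heure est-il ?",
        "Il est quelle heure ?",
        "Tu as l’heure ?",
    ],
}


def normalize(text: str) -> str:
    """Lowercase, unify apostrophes, turn other punctuation into spaces, collapse spaces."""
    text = unicodedata.normalize("NFC", text).lower().replace("’", "'")
    kept = "".join(c if c.isalnum() or c in "'-" else " " for c in text)
    return " ".join(kept.split())


# Invert the table: normalized expression -> intent name.
EXPRESSION_TO_INTENT: Dict[str, str] = {
    normalize(expr): intent
    for intent, exprs in TRAINING.items()
    for expr in exprs
}


def lookup_intent(text: str) -> Optional[str]:
    """Return the intent whose expression matches `text`, or None."""
    return EXPRESSION_TO_INTENT.get(normalize(text))
```

All three phrasings resolve to `what_time_is_it`, which is exactly the many-to-one relationship the intent captures.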

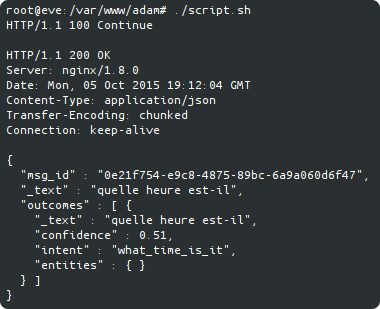

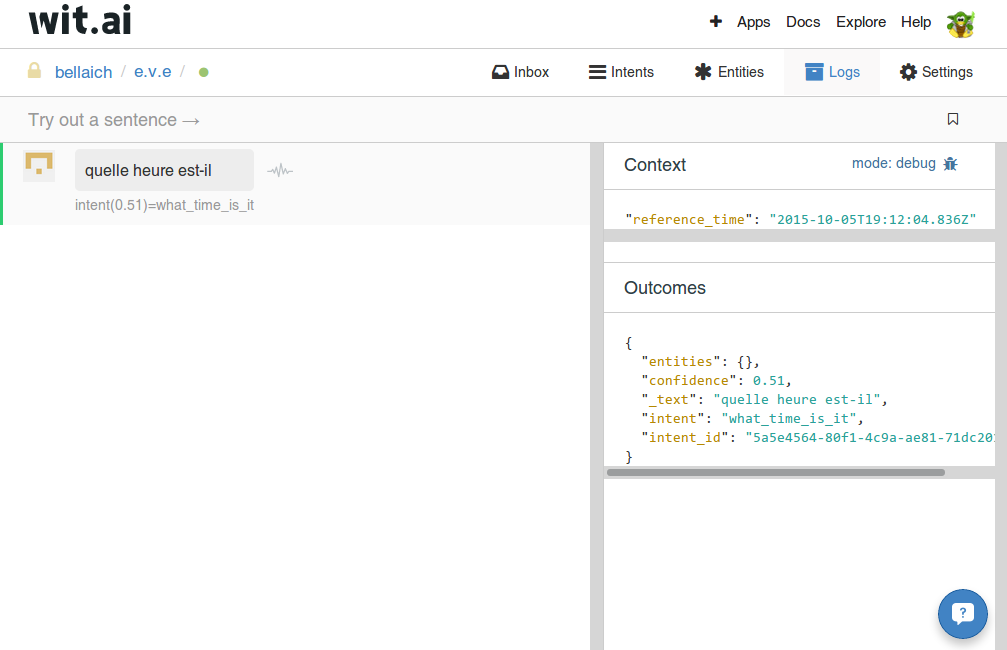

I tested this newly created intent with the “Quelle heure est-il ?” recording made earlier with the brand-new microphone. I ran yesterday’s script (after changing the Access Token to use the “e.v.e” wit.ai App and recognize French speech) and got this back:

Just like yesterday, it returned the transcribed text of the recording ("_text" : "quelle heure est-il"). But this time, it also returned the recognized intent ("intent" : "what_time_is_it") along with a confidence level (0.51).

Nice!
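On E.V.E's side, the script then needs to pull the intent and confidence out of that JSON. Here is a minimal sketch, assuming a response with a top-level `_text` and an `outcomes` list (the shape wit.ai used around that time; other API versions may differ). The 0.5 minimum confidence is an arbitrary, hypothetical threshold; note that the 0.51 returned here only just clears it.

```python
import json
from typing import Optional, Tuple

# Assumed response shape; the text, intent and confidence are the ones from this post.
SAMPLE = """{
  "_text": "quelle heure est-il",
  "outcomes": [
    {"_text": "quelle heure est-il",
     "intent": "what_time_is_it",
     "entities": {},
     "confidence": 0.51}
  ]
}"""


def best_intent(raw: str, min_confidence: float = 0.5) -> Tuple[Optional[str], float]:
    """Return (intent, confidence) for the most confident outcome.

    The intent is None when there is no outcome or the confidence is too low.
    """
    data = json.loads(raw)
    outcomes = data.get("outcomes") or []
    if not outcomes:
        return None, 0.0
    top = max(outcomes, key=lambda o: o.get("confidence", 0.0))
    confidence = top.get("confidence", 0.0)
    if confidence < min_confidence:
        return None, confidence
    return top.get("intent"), confidence
```

Returning the confidence even when the intent is rejected lets the caller decide whether to ask the user to repeat.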

It would have returned the same intent if the recording had matched one of the other expressions (“Tu as l’heure ?” or “Il est quelle heure ?”).
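Since E.V.E only cares about the intent name, a single handler covers all three phrasings. Here is a minimal dispatch sketch; `tell_time`, `HANDLERS` and `respond` are hypothetical names, and the French answer format is just an example.

```python
from datetime import datetime
from typing import Callable, Dict, Optional


def tell_time(now: Optional[datetime] = None) -> str:
    """Hypothetical handler for what_time_is_it, answering in French."""
    if now is None:
        now = datetime.now()
    unit = "heure" if now.hour <= 1 else "heures"
    return f"Il est {now.hour} {unit} {now.minute:02d}."


# One entry per intent: the three French phrasings all land on tell_time.
HANDLERS: Dict[str, Callable[[], str]] = {
    "what_time_is_it": tell_time,
}


def respond(intent: Optional[str]) -> str:
    """Run the handler for `intent`, or apologise if it is unknown or missing."""
    handler = HANDLERS.get(intent) if intent else None
    if handler is None:
        return "Je n'ai pas compris."  # "I didn't understand."
    return handler()
```

Calling `respond("what_time_is_it")` gives the spoken answer, while unknown or missing intents fall back to a polite "I didn't understand". Adding a new intent then only means adding one entry to `HANDLERS`.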

You can also trace all of this in wit.ai’s logs:

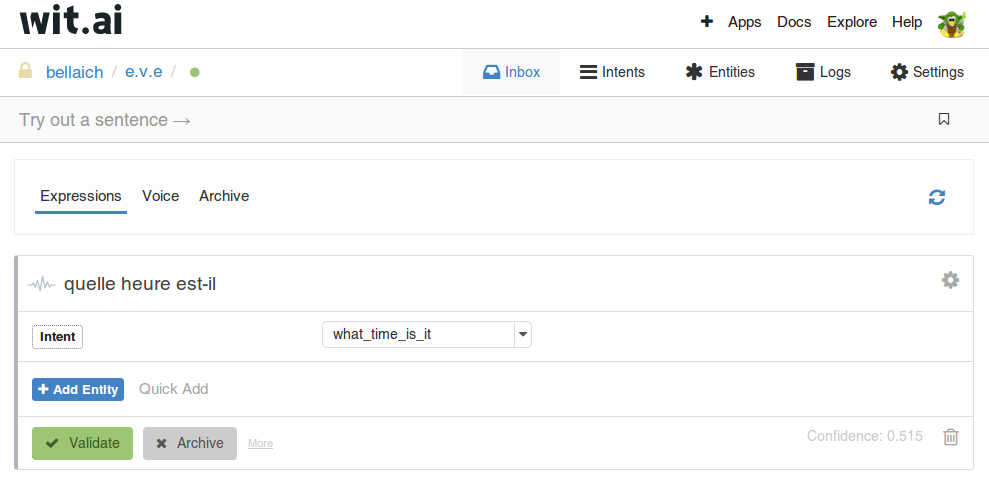

Of course, it’s not easy to anticipate *all* the necessary intents. One nice wit.ai feature is the Inbox, which helps you discover the intents you need: every expression your users send to your wit.ai App ends up in the App’s Inbox:

From there, you can create the necessary intents from the collected expressions (or reuse intents shared by the community). The Inbox also lets you train your intents by validating or correcting wit.ai’s interpretations, so that it gets better at understanding your users over time.

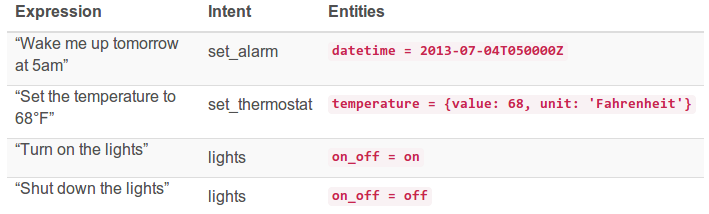

An intent like “What time is it?” is easy to handle: several expressions may correspond to it, but you simply associate all of them with the same intent. Now, if the user says “Wake me up tomorrow at 5 am” or “Set the alarm for 5 am”, there is another level of complexity.

It’s not enough to know that the set_alarm intent is involved; you also need to capture the date and time the alarm should be set for. This is where wit.ai’s “entity” concept comes into play:

In addition to determining the user’s intent, wit.ai tries to capture and normalize these entities for you (if you ask it to). You get the entities back in wit.ai’s JSON result.

As you can see in the lights example above, an entity value is not necessarily tied to a specific phrase in the sentence: on_off = off is inferred from the sentence as a whole, whereas temperature is extracted from the “68°F” phrase.
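Back to the alarm example: once wit.ai returns a `set_alarm` intent, the script still has to read the date and time out of the entities. The sketch below assumes a built-in `datetime` entity whose `value` is an ISO 8601 timestamp; the sample response (including the date) is made up for illustration, since the post doesn't show one.

```python
import json
from datetime import datetime
from typing import Optional

# Made-up set_alarm response; the "datetime" entity layout is an assumption.
SAMPLE_ALARM = """{
  "_text": "wake me up tomorrow at 5 am",
  "outcomes": [{
    "_text": "wake me up tomorrow at 5 am",
    "intent": "set_alarm",
    "entities": {
      "datetime": [{"value": "2014-10-31T05:00:00.000+01:00", "grain": "hour"}]
    }
  }]
}"""


def alarm_time(raw: str) -> Optional[datetime]:
    """Return the alarm time from the first set_alarm outcome, if any."""
    data = json.loads(raw)
    for outcome in data.get("outcomes", []):
        if outcome.get("intent") != "set_alarm":
            continue
        values = outcome.get("entities", {}).get("datetime", [])
        if values:
            # fromisoformat handles "...T05:00:00.000+01:00" on Python 3.7+.
            return datetime.fromisoformat(values[0]["value"])
    return None
```

Because wit.ai normalizes “tomorrow at 5 am” into an absolute timestamp, E.V.E doesn't have to do any date arithmetic on the raw sentence.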

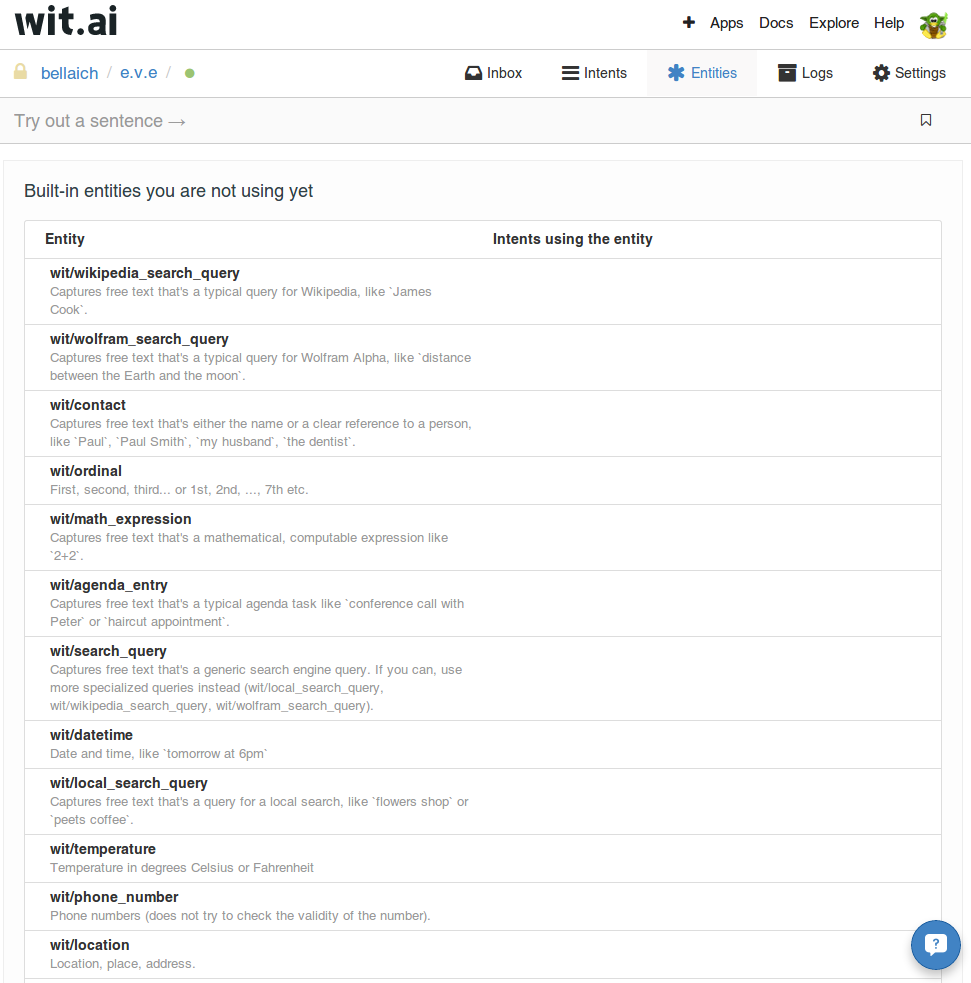

From wit.ai’s back-office, you can add your own entities (enum-based, composite, …) to any expression. You can also use built-in entities. Here is an excerpt:

This looks really good. I think I’m about to make up my mind and select wit.ai for E.V.E’s voice recognition.