I spent part of my weekend finishing the voice recognition subsystem, tweaking E.V.E’s UX and cleaning up the E.V.E / A.D.A.M code.

Let’s see how it went!

E.V.E loves snakes

E.V.E’s interface is based on HTML5/JavaScript and runs inside a browser. I wasn’t too happy with the browsers available on my RPi until I settled on Epiphany. Even then, I had to write a little script to simulate an F11 key press to run the browser in fullscreen mode.
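The launcher script isn’t shown in the post. As a rough sketch, assuming the xdotool utility is installed, that Epiphany’s executable is called epiphany-browser (this varies between distributions) and that E.V.E is served from a hypothetical local URL, it could start the browser, wait a few seconds and then send F11 to the focused window:

```python
import subprocess
import time

# Assumption: Epiphany's executable name on Raspbian; it may differ elsewhere.
BROWSER = "epiphany-browser"
# Hypothetical address where E.V.E's HTML5 interface is served.
EVE_URL = "http://localhost/eve/"


def launch_commands(url):
    """Return the two commands: open the browser, then press F11."""
    return [
        [BROWSER, url],
        # xdotool sends the key press to the window that currently has focus.
        ["xdotool", "key", "F11"],
    ]


def launch_fullscreen(url=EVE_URL, delay=5.0):
    open_cmd, key_cmd = launch_commands(url)
    subprocess.Popen(open_cmd)  # start the browser without waiting for it to exit
    time.sleep(delay)           # crude: give the window time to appear and take focus
    subprocess.run(key_cmd, check=True)


if __name__ == "__main__":
    launch_fullscreen()
```

The fixed delay is the weak spot of this approach: if the browser is slow to start, the key press lands on the wrong window.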

Actually, I didn’t really need a full browser. Since I was happy with Epiphany’s WebKit engine, I decided to strip E.V.E down to the bare minimum (so to speak…).

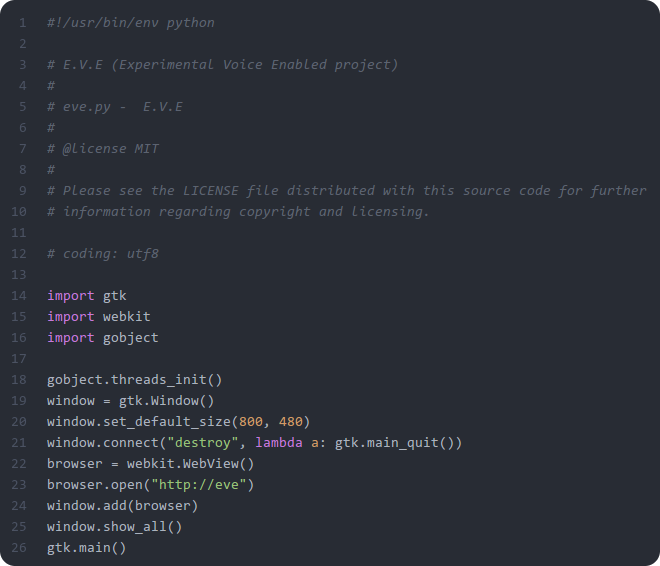

I wrote a few lines of Python and used the WebKit Python binding to create a simple web view the size of my RPi touchscreen (800 x 480):
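The script itself isn’t included in the post. As a minimal sketch, assuming PyGObject with the WebKit2GTK bindings (a swapped-in choice: the post only mentions “the WebKit python binding”, which on Raspbian at the time may well have been the older python-webkit package) and a hypothetical local URL for E.V.E’s interface, such a viewer could look like this:

```python
import sys

# E.V.E's touchscreen resolution, from the text (800 x 480).
SCREEN_WIDTH, SCREEN_HEIGHT = 800, 480
# Hypothetical default address of E.V.E's HTML5 interface.
DEFAULT_URL = "http://localhost/eve/"


def resolve_url(argv):
    """Use the first command-line argument as the URL, if there is one."""
    return argv[1] if len(argv) > 1 else DEFAULT_URL


def main(argv):
    # Imported here so the helper above can be used without GTK installed.
    import gi
    gi.require_version("Gtk", "3.0")
    # Assumption: WebKit2GTK API version 4.0; some distributions ship 4.1.
    gi.require_version("WebKit2", "4.0")
    from gi.repository import Gtk, WebKit2

    window = Gtk.Window(title="E.V.E")
    window.set_default_size(SCREEN_WIDTH, SCREEN_HEIGHT)
    window.connect("destroy", Gtk.main_quit)  # quit when the window is closed

    view = WebKit2.WebView()
    view.load_uri(resolve_url(argv))
    window.add(view)

    window.show_all()
    Gtk.main()


if __name__ == "__main__":
    main(sys.argv)
```

Run as ./eve.py (optionally followed by a URL), the window is just a web view with no toolbar, tabs or address bar.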

Simple and effective. It runs E.V.E perfectly well and is much faster to launch.

The only drawback was the annoying presence of the window manager’s (Openbox) decorations around the window. So I edited ~/.config/openbox/lxde-pi-rc.xml and added these lines to its `<applications>` section to remove all window decorations for “eve.py”:

```xml
<application name="eve.py">
  <decor>no</decor>
</application>
```

E.V.E now boots up much faster, without the unnecessary clutter of a full browser.

Tweaking E.V.E’s interface

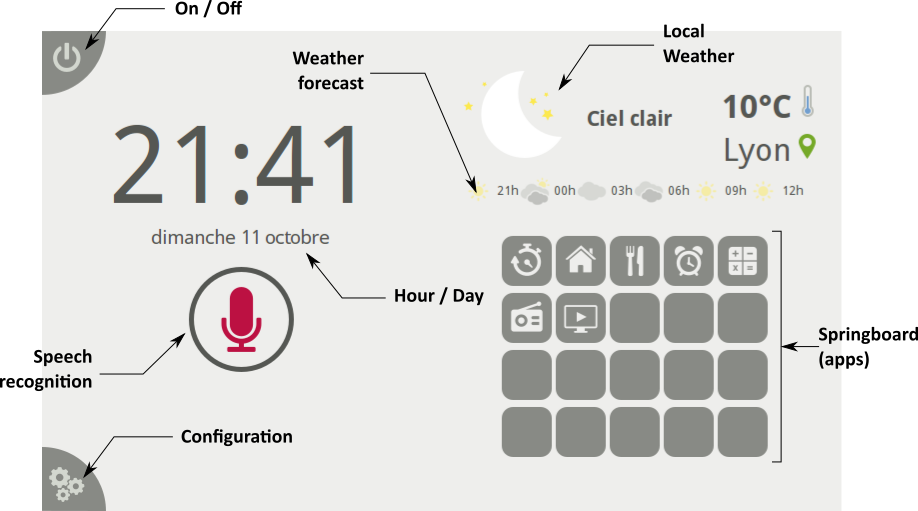

After a couple of weeks playing with E.V.E’s touch interface, I felt I had to tweak its UX a bit:

- I removed the “home button” from E.V.E’s home screen (where it was useless) and dimmed its color a bit in the other apps (Orange TV and Internet Radio)

- I removed the “information button”, which was too small and quite useless

- The “configuration button” is much bigger (and thus more accessible). It now occupies the bottom left corner

- I added an “On / Off” switch button in the top left corner. When touched, the screen turns black (and turns back on when the screen is touched again)

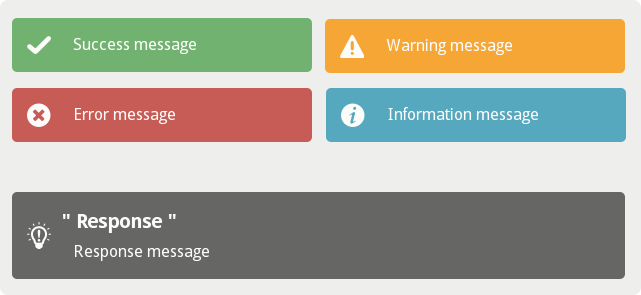

Notification system and error controls

Now that I am in the process of integrating voice recognition, I need a notification system to display contextual feedback. I settled on non-intrusive “toast” notifications and created five different kinds of toasts:

- Success feedback and messages

- Warning messages

- Error messages

- Information messages

- Responses

The first four are displayed at the top, while the last one is displayed at the bottom and spans the full width of the display; it will be used for answers from the voice recognition system. The “success”, “warning”, “error” and “information” toasts disappear after a few seconds (or when touched). The “response” toast stays up until the user touches it.
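E.V.E’s toasts live in its HTML5/JavaScript interface, which isn’t shown. The display rules above can be modelled in a few lines of Python; the kind names and the 4-second timeout (standing in for “a few seconds”) are assumptions:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ToastStyle:
    position: str             # "top" or "bottom"
    full_width: bool
    timeout: Optional[float]  # seconds before auto-dismiss; None = stays until touched


# Assumption: 4 seconds stands in for "a few seconds".
AUTO_DISMISS = 4.0

TOAST_STYLES = {
    "success": ToastStyle("top", False, AUTO_DISMISS),
    "warning": ToastStyle("top", False, AUTO_DISMISS),
    "error": ToastStyle("top", False, AUTO_DISMISS),
    "info": ToastStyle("top", False, AUTO_DISMISS),
    "response": ToastStyle("bottom", True, None),
}


def should_dismiss(kind, age, touched):
    """True if a toast of this kind, shown for `age` seconds, should disappear."""
    if touched:
        return True  # every kind of toast can be dismissed by touching it
    timeout = TOAST_STYLES[kind].timeout
    return timeout is not None and age >= timeout
```

Keeping the rules in one table makes it easy to add a sixth kind of toast later without touching the dismissal logic.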

The error toasts allowed me to implement a few error controls that were sorely missing. Here’s what it looks like (when I kill the A.D.A.M process):
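E.V.E’s own checks are in its JavaScript and aren’t shown. As a rough Python illustration of the idea, assuming A.D.A.M answers JSON over HTTP at a hypothetical local address, a failed or garbled exchange can be turned into an error toast payload instead of failing silently:

```python
import json
import urllib.request

# Hypothetical address of A.D.A.M's HTTP interface (not given in the post).
ADAM_URL = "http://localhost:8000/"


def error_toast(message):
    """Build an "error" toast payload for the interface."""
    return {"kind": "error", "message": message}


def parse_adam_reply(raw):
    """Decode A.D.A.M's JSON reply and return (data, toast)."""
    try:
        return json.loads(raw.decode("utf-8")), None
    except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
        return None, error_toast("A.D.A.M sent an invalid response")


def call_adam(path, timeout=3.0):
    """Query A.D.A.M; on failure return an error toast instead of raising."""
    try:
        with urllib.request.urlopen(ADAM_URL + path, timeout=timeout) as response:
            return parse_adam_reply(response.read())
    except OSError as exc:  # URLError, refused connections and timeouts
        return None, error_toast(f"A.D.A.M is not responding ({exc})")
```

When the A.D.A.M process is killed, the connection is refused, urlopen raises URLError (a subclass of OSError), and the caller gets an error toast to display.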

Orange TV API

The Orange TV application has been split into two parts:

- The Orange TV application itself

- An API that can be used by the voice recognition system
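The post doesn’t describe what the API looks like. As a minimal sketch, assuming the TV is driven through the Orange decoder’s local HTTP remote-control endpoint that community projects commonly use (`http://<decoder-ip>:8080/remoteControl/cmd?operation=01&key=<code>&mode=0`), with a hypothetical decoder IP and key codes that should be verified against your own hardware, a shared command layer could look like this:

```python
import urllib.request
from urllib.parse import urlencode

# Hypothetical IP address of the Orange TV decoder on the local network.
DECODER_HOST = "192.168.1.20"

# Assumption: key codes as commonly reported by community projects for
# Orange's TV decoders; check them against your own decoder.
KEYS = {
    "power": 116,
    "channel_up": 402,
    "channel_down": 403,
    "volume_up": 115,
    "volume_down": 114,
    "mute": 113,
}


def command_url(action, host=DECODER_HOST):
    """Build the remote-control URL that simulates one key press."""
    query = urlencode({"operation": "01", "key": KEYS[action], "mode": 0})
    return f"http://{host}:8080/remoteControl/cmd?{query}"


def send(action, host=DECODER_HOST, timeout=3.0):
    """Send the key press to the decoder (requires the decoder on the network)."""
    with urllib.request.urlopen(command_url(action, host), timeout=timeout) as response:
        return response.status
```

Keeping URL construction separate from the network call lets both the Orange TV application and the voice recognition code reuse it, and it can be checked without a decoder on the network.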

A.D.A.M

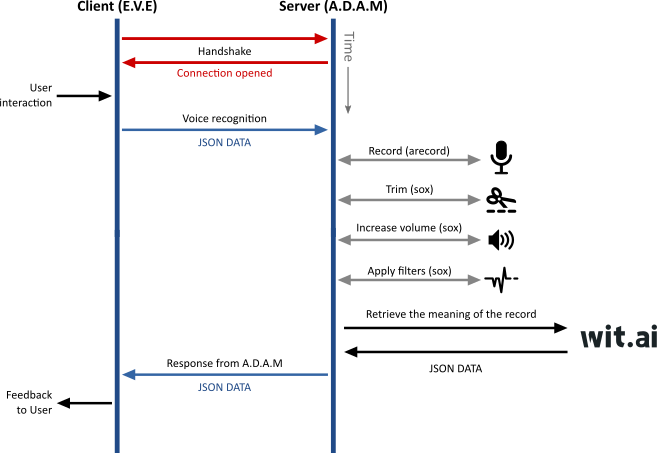

The E.V.E / A.D.A.M / wit.ai workflow has been implemented:

Since I’m using the bigger of my two USB microphones, the trimming / filtering process is not necessary. Actually, to speed up the exchanges between A.D.A.M and wit.ai, I downgraded the recording quality to 8 bits / 8000 Hz / mono (instead of 16 bits / 48000 Hz / stereo).
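To put numbers on that downgrade: the raw PCM data rate is bytes per sample × sample rate × channels, so the recordings shrink by a factor of 24. The sketch below assumes the recording is made with ALSA’s arecord (the post doesn’t say which tool A.D.A.M uses; the device name “default” is a placeholder, and a USB microphone often shows up as something like plughw:1,0). It also shows how the WAV file might be posted to wit.ai’s /speech endpoint with a bearer token. The API version date is a hypothetical placeholder, and the response format depends on the version you pin:

```python
import subprocess
import urllib.request


def bytes_per_second(bits, rate, channels):
    """Raw PCM data rate in bytes per second (WAV header not included)."""
    return bits // 8 * rate * channels


# Before: 16 bits / 48000 Hz / stereo = 2 * 48000 * 2 = 192000 bytes/s
BEFORE = bytes_per_second(16, 48000, 2)
# After: 8 bits / 8000 Hz / mono = 1 * 8000 * 1 = 8000 bytes/s, 24 times less
AFTER = bytes_per_second(8, 8000, 1)


def record_command(path, seconds, device="default"):
    """arecord arguments for an unsigned 8-bit, 8000 Hz, mono WAV file."""
    return ["arecord", "-D", device, "-f", "U8", "-r", "8000", "-c", "1",
            "-d", str(seconds), "-t", "wav", path]


def record(path, seconds=4, device="default"):
    subprocess.run(record_command(path, seconds, device), check=True)


def wit_speech_request(wav_bytes, token, version="20200513"):
    """Prepare (but do not send) a POST of the recording to wit.ai's /speech endpoint."""
    return urllib.request.Request(
        f"https://api.wit.ai/speech?v={version}",  # version date is a placeholder
        data=wav_bytes,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "audio/wav"},
        method="POST",
    )
```

Sending the prepared request is then a matter of passing it to urllib.request.urlopen. At 8000 bytes per second, a four-second command is about 32 kB instead of roughly 768 kB.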

wit.ai configuration and training

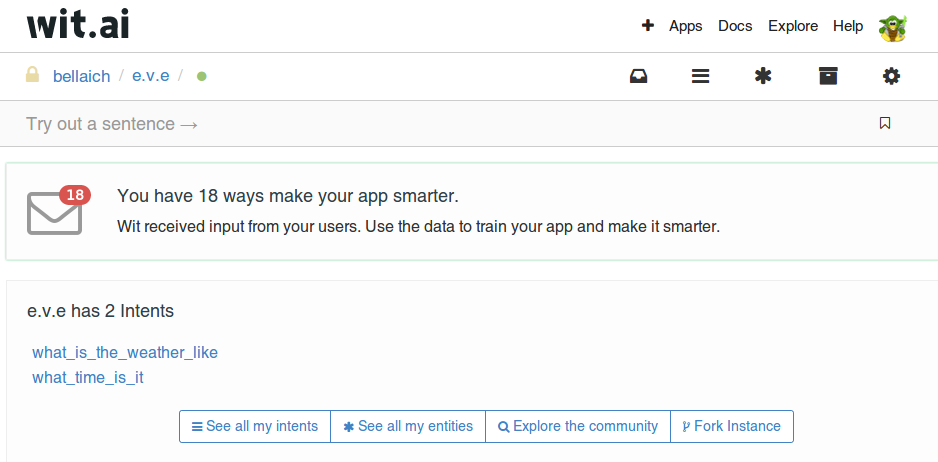

I was impatient to test the full recognition process, so I spent only a few moments on wit.ai’s configuration and training. I created only two intents (and no entities yet):

With so few intents, the parsing process relies too much on E.V.E’s code for now. I’ll have to spend more time on wit.ai later on, and then simplify E.V.E’s code.
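The two intents aren’t named in the post. As a hedged sketch of what “relying on E.V.E’s code” can mean, the code below assumes a reply shaped like current wit.ai responses: a `text` field and an `intents` list with `name` and `confidence` (older API versions used `_text` and `outcomes` instead). It also assumes two hypothetical intents named tv and radio. The app takes the intent from wit.ai and then picks the details out of the transcript with local keyword checks:

```python
# Hypothetical intent names: the post does not say what the two intents are.


def top_intent(reply, min_confidence=0.5):
    """Return the most confident intent name, or None if it is too uncertain."""
    intents = reply.get("intents", [])
    if not intents:
        return None
    best = max(intents, key=lambda intent: intent.get("confidence", 0))
    return best["name"] if best.get("confidence", 0) >= min_confidence else None


def parse_command(reply):
    """Combine wit.ai's intent with local keyword parsing of the transcript."""
    intent = top_intent(reply)
    words = reply.get("text", "").lower().split()
    if intent == "tv":
        # Without entities, the details must be picked out of the text locally.
        if "off" in words:
            return ("tv", "power_off")
        if "on" in words:
            return ("tv", "power_on")
        return ("tv", "unknown")
    if intent == "radio":
        return ("radio", "play")
    return (None, None)
```

Once wit.ai extracts entities for things like on/off, those keyword checks can go away, which is the kind of simplification planned above.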

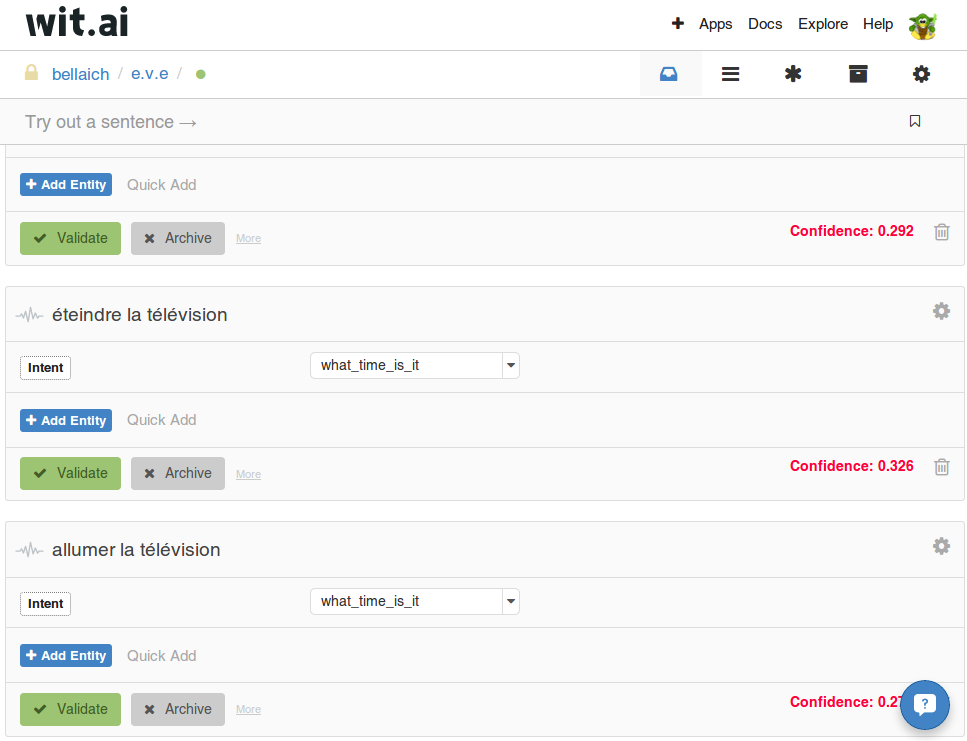

I tried a few recognitions and trained wit.ai on them (mostly dropping false recognitions and assigning the others to the proper intents):

Wrapping things up

It’s now time to wrap things up and test E.V.E’s brand-new capabilities:

I must say that I’m very happy with it. It’s a bit slow (at least slower than Siri, Cortana, or whatever Google calls its Android speech recognition system), but it works nicely. And I can’t turn my TV on and off with Siri anyway!

I created a Git repository, and I will push E.V.E / A.D.A.M’s code soon (there is still a bit of cleaning to do).

I was testing E.V.E from my kitchen bar, and it gave me ideas. Let’s enjoy the moment and treat ourselves to a nice homemade pizza!